Timekeeping In A 5G World: Coordinated Universal Time Blown Away By Ultra-Precision Time On Tap

New 5G cellular wireless technology will soon transfer data – including the correct time – 50 times faster than existing 4G services. 5G also enables a constant internet connection.

These two innovations alone are revolutionizing timing precision. Coordinated Universal Time – widespread as it now is – will pale in comparison to the accuracy possible from new timing sources.

Here is what that means to the timekeeping world.

Coordinated Universal Time (UTC) will soon have a technological competitor that may shake up the world’s timekeeping: the coming 5G cellular wireless technology will bring an unprecedented accuracy to timekeeping.

5G: why should you care?

The concept of 5G cellular wireless technology may be a little blurry to many, and some will no doubt ask why they should care. I’ll explain both.

Essentially, 5G is the fifth generation of cellular network technology. However, it offers not just an upgrade but a quantum leap over 4G technology.

The new 5G wireless already being rolled out by Verizon in select U.S. cities brings three new things to the table: hugely greater data transfer speed, almost real-time responsiveness, and the ability to connect a lot more devices at once.

Consumer benefits of using 5G cellular wireless capabilities include being able to connect extremely small, inexpensive, low-power devices to the internet continuously.

Some may object, saying their Apple Watch Series 4 already has a cellular connection to the internet. True. But what about that smart bandage covering surgical sutures that your doctor can use to determine whether the wound is healing nicely or if it is infected? Or your tiny cardiac pacemaker?

5G technology makes a continuous, real-time connection for monitoring small devices a reality.

Next, 5G technology cuts latency from roughly 50 milliseconds to just one millisecond or less. Latency is the elapsed time between a device’s request for information and its receipt of the answer, including the time needed to process the request.

At one millisecond, the latency of data distribution is virtually imperceptible. Think of a surgeon conducting a delicate procedure on a patient located 8,000 kilometers away. Feedback from every tiny movement of the joystick on one continent that controls the scalpel on another must be as close to instantaneous as possible. A latency of one millisecond or less on the radio link brings that goal much closer.
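Whatever the radio link achieves, distance still sets a hard floor, because no signal travels faster than light. The widely quoted 5G figure of about one millisecond describes the hop between a device and the nearest cell site, not the whole path to another continent. The short Python sketch below computes that physical floor for the 8,000-kilometer surgery example. It assumes a straight-line path, and it assumes light in optical fiber moves at about two-thirds of its vacuum speed; real cable routes are longer, so real delays are higher.

```python
# Speed of light in vacuum, expressed in kilometers per millisecond.
C_VACUUM_KM_PER_MS = 299_792.458 / 1000  # about 299.8 km/ms

# Assumption: light in optical fiber travels at roughly two-thirds of c.
FIBER_FRACTION = 2 / 3


def min_latency_ms(distance_km: float, fraction_of_c: float = 1.0) -> float:
    """Lower bound on one-way propagation delay over distance_km, in ms."""
    return distance_km / (C_VACUUM_KM_PER_MS * fraction_of_c)


distance = 8_000  # km, the remote-surgery example (straight line)
one_way_vacuum = min_latency_ms(distance)
one_way_fiber = min_latency_ms(distance, FIBER_FRACTION)

print(f"one way, vacuum:           {one_way_vacuum:.1f} ms")
print(f"round trip, vacuum:        {2 * one_way_vacuum:.1f} ms")
print(f"one way, fiber (2/3 of c): {one_way_fiber:.1f} ms")
```

Even in a vacuum the one-way trip takes roughly 27 ms and the round trip roughly 53 ms, so a one-millisecond radio hop shaves off only a small part of the total delay at intercontinental distances.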

According to Qualcomm, one of the equipment players in 5G technology, 5G will quadruple data capacity over current systems by leveraging wider bandwidths and advanced antenna technologies. Qualcomm researchers estimate that by 2035 a broad range of industries – retail, education, transportation, entertainment, healthcare, and many others – could produce up to $12.3 trillion worth of goods and services enabled by 5G mobile technology that did not exist before.

And they estimate 5G will create up to 22 million new jobs worldwide.

That makes 5G’s economic contribution to the global GDP approximately equivalent to that of India, currently the world’s seventh largest economy.

5G: effects on timekeeping

There are many in the luxury watch community who are passionate about timekeeping precision, prizing the high-accuracy quartz watches created and/or used by Citizen, Breitling, Omega, Longines, and Grand Seiko. Each has an accuracy rating of +/- 10 seconds or less per year.

Longines and Grand Seiko say their high-accuracy quartz pieces are accurate to within +/- 5 seconds annually. These are autonomous (that is, they are self-contained) timepieces.

Grand Seiko 9F Quartz GMT

Seiko Watch Corporation president Shuji Takahashi sees 5G technology as having the greatest impact on smartwatches. He’s right.

Takahashi says, “Even before the smartwatches, the watch industry has gone through several exposures to new technologies such as cellphones and smartphones. Each time we face this, we concluded we should pursue only the essence of watchmaking. We are not an IT or telecommunications company. We should stay true to our nature as a watch manufacturer.”

Even as smartwatches gain in popularity, interest in traditional watches continues growing as well. That’s a good thing for Seiko. “People seek more value in traditional watches because they are more personal and emotional. If we one day decide to enter the smartwatch market, it has to stay true to Seiko’s watch philosophy: an independent watch with useful functions and no need for charging the battery with a connector,” Takahashi continues.

What about Grand Seiko’s latest high-accuracy model, the 9F Quartz GMT?

Takahashi calls this latest offering the essence of watchmaking. He says the 9F addresses all the weaknesses of a quartz movement. It is close to a mechanical watch in many respects, including bold and hefty hands and an instant calendar change.

The bottom line, at least for Grand Seiko and Seiko, is they have made the strategic decision to ignore the improved accuracy brought by the latest 5G technology. They will continue producing only autonomous timepieces not dependent on any outside timing source. Seiko believes this approach is accurate enough for their customers’ perceived purposes.

However, if absolute timing accuracy interests you, the most accurate timepieces are not the autonomous watches from Seiko and the other four brands discussed here, excellent as they are. Rather, they are the watches that are platforms for reporting the time from other sources.

Casio Wave Ceptor

Citizen’s Satellite Wave line of watches, which picks up the time from GPS satellites, is one. Casio and Casio’s G-Shock lines employing Wave Ceptor technology are another; these receive radio time signals from installations in Japan, China, the UK, Germany, and Fort Collins, Colorado, in the United States. The use of such transmissions ensures the absolute, most accurate time available.

Or does it?

What is the correct time exactly and where does it come from?

It’s a good question, and it turns out that the correct time is a matter of computation and technology. I rang up Andrew Novick at the U.S. National Institute of Standards and Technology (NIST) to learn more.

NIST has a spanking-new cesium fountain atomic clock, the NIST-F2, which would neither gain nor lose one second in about 300 million years. Official NIST time, derived from such precise equipment, is used to timestamp hundreds of billions of dollars in U.S. financial transactions.

NIST time also flows to industry and the public through the Internet Time Service. More than eight billion automated requests per day come to NIST servers to synchronize clocks in computers and network devices around the world. NIST radio broadcasts update an estimated 50 million radio-controlled watches and clocks daily.

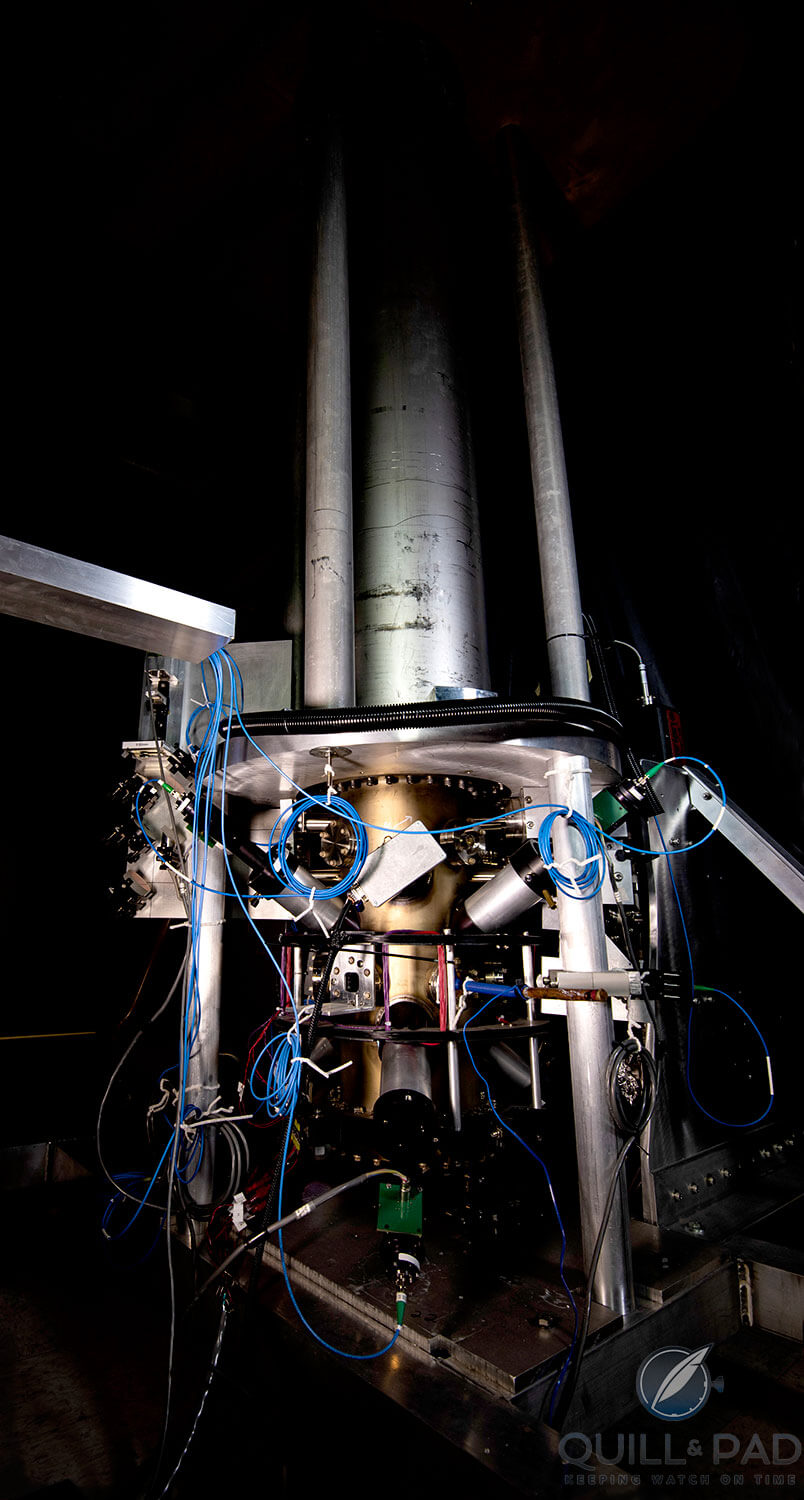

NIST-F2 atomic clock (photo courtesy NIST)

Still, the NIST-F2, accurate as it is, does not provide what the world accepts as the correct time. That honor still goes to UTC: Coordinated Universal Time.

According to NIST’s Novick, “The NIST-F2 is just one of the time signals used to compute UTC along with time signals from the U.S. Naval Observatory’s atomic clocks. The time contributed by NIST-F2 and the USNO never differ from one another by more than 20 nanoseconds. From there UTC also polls 250-300 other certified time providers worldwide, then computes a weighted average of the combined times. The world recognizes that weighted average computation as the correct UTC time.”

Note also that the time maintained by both NIST and the USNO never differs from UTC by more than 0.0000001 seconds (100 nanoseconds). That’s why NIST’s customers, the U.S. military, and the U.S.’s allies treat them as pretty much the same time. And they are, unless accuracy to about one part in 10^16 (16 decimal places) is important to you. Then NIST-F2 time is what you want.
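The "16 decimal places" figure is easiest to understand as a fractional error: one second gained or lost over 300 million years. The Python arithmetic below converts that claim into a dimensionless rate and expresses the 0.0000001-second bound in nanoseconds. It assumes a 365.25-day year; a different year length would change only the third significant digit.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year: 31,557,600 s

# "Less than one second in 300 million years" expressed as a fractional error.
fractional_error = 1 / (300e6 * SECONDS_PER_YEAR)
print(f"NIST-F2 fractional error: about {fractional_error:.1e}")

# Maximum NIST/USNO difference from UTC, converted from seconds to nanoseconds.
max_offset_ns = 0.0000001 * 1e9
print(f"maximum offset from UTC: {max_offset_ns:.0f} ns")
```

The result is roughly 1 x 10^-16, which is where the "16 decimal places" shorthand comes from.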

Now what?

It isn’t enough to have the correct time; industry requires the correct time at the right time. When an application requires such timing precision, getting the correct time to the end user becomes a critical issue. Cellular telephones, Global Positioning System satellite receivers, and the electric power grid are just a few of the systems relying on the high accuracy of atomic clocks.

Although Apple declined an interview for this article, my educated guess is that the Apple Watch gets the time from a UTC-traceable source such as NIST, Apple puts it on its servers, and then makes it available to all Apple Watches.

Therein lies the problem that 5G technology solves. Latency – that pesky time delay to request information, then receive it back from the source – can ruin the accuracy of a time signal. With 5G and its one-millisecond latency, that lag time virtually disappears. Or at least it can be measured in both directions and then netted out of the time signal to arrive at the correct time.

It gets a bit more complicated, though. The latency of the request traveling from the end user to the source is one figure. The latency of the answer coming back from the source to the user may be something else entirely.

Don’t forget the internet sends data packets along a path – but the return trip is not always the same path as used when the data was requested. And that different path may take more or less time than the first.
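Netting out latency measured in both directions is essentially what the Network Time Protocol (NTP) does with four timestamps. The minimal Python sketch below implements NTP's standard offset and delay formulas, plus a hypothetical `simulate` helper that feeds them made-up outbound and return delays in milliseconds. It shows why unequal paths matter. The computed offset is wrong by half the difference between the outbound and return delays, and that error can never exceed half the round-trip delay.

```python
def offset_and_delay(t0, t1, t2, t3):
    """NTP-style clock offset and round-trip delay from four timestamps.

    t0: client sends request   (client clock)
    t1: server receives request (server clock)
    t2: server sends reply      (server clock)
    t3: client receives reply   (client clock)
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2  # assumes equal delay each way
    delay = (t3 - t0) - (t2 - t1)         # total time spent in transit
    return offset, delay


def simulate(true_offset, d_out, d_back, processing=0.0):
    """Hypothetical helper: the server clock runs true_offset ahead of the client.

    d_out and d_back are the real one-way transit times; processing is the
    time the server holds the request before replying.
    """
    t0 = 0.0
    t1 = t0 + d_out + true_offset   # arrival, read on the server clock
    t2 = t1 + processing            # reply leaves, server clock
    t3 = t2 - true_offset + d_back  # reply arrives, read on the client clock
    return offset_and_delay(t0, t1, t2, t3)


# True offset is 5 ms in every case; only the path delays change.
for d_out, d_back in [(25, 25), (40, 10), (1.5, 0.5)]:
    est, rtt = simulate(5.0, d_out, d_back)
    print(f"out={d_out} ms back={d_back} ms -> estimate {est} ms, "
          f"error {est - 5.0} ms, round trip {rtt} ms")
```

With symmetric 25 ms paths the estimate is exact. A 40/10 split on the same 50 ms round trip produces a 15 ms error. A 1.5/0.5 split on a short, 5G-style round trip leaves only half a millisecond of error, because the worst case shrinks along with the round-trip delay.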

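So how do you factor that latency out of the actual time? Netting it out assumes the trip takes equally long in both directions, so the error can be no larger than half the round-trip delay. The new 5G technology is so fast that it shrinks that delay, and with it such transmission errors, to nearly irrelevant levels.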

5G: real world impact

Use of 5G technology in receiving time data greatly increases its accuracy. It may seem that I’m splitting hairs here. Not true.

Take for example a GPS location reading. Let’s give it a life-critical function such as targeting a Tomahawk cruise missile on a building 1,500 miles away. Targeting systems using GPS require absolutely precise time measurement.

According to Novick at NIST, an error as small as one nanosecond in the time used by GPS creates an error on the earth’s surface of one foot. The latency error created in the old 2G, 3G, or 4G cellular transmission systems could have produced GPS timing discrepancies of several hundred nanoseconds.
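Novick's rule of thumb follows from the speed of light: a GPS receiver turns signal travel time into distance, so a timing error becomes a distance error. The Python sketch below applies that conversion. It simplifies by treating the timing error as a pure range error along a single line of sight; an actual GPS position error also depends on satellite geometry.

```python
C_M_PER_S = 299_792_458  # speed of light in vacuum, exact SI value
METERS_PER_FOOT = 0.3048


def range_error_feet(timing_error_ns: float) -> float:
    """Distance light travels during the timing error, in feet."""
    return C_M_PER_S * timing_error_ns * 1e-9 / METERS_PER_FOOT


for ns in (1, 100, 300):
    print(f"{ns:>4} ns -> {range_error_feet(ns):.1f} ft")
```

One nanosecond works out to just under a foot, and "several hundred nanoseconds" corresponds to hundreds of feet, which is enough to put a strike on the wrong building.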

That’s more than enough to cause the missile to miss its targeted building and possibly hit another, non-combatant, building. Though 5G technology doesn’t eliminate such error, it dramatically reduces its likelihood – down to virtually nothing.

A word about Apple Watch and 5G

Apple has made the strategic decision to delay the launch of its 5G devices until 2020; I presume that includes the Apple Watch. That would put Apple well over a year behind its competitors Samsung, Huawei, Oppo, and Xiaomi, most of which are using Qualcomm’s 5G modem. Apple, however, has committed to using Intel’s XMM8161 chip set.

How does this affect the Apple Watch’s accuracy? From a technological standpoint, the difference is significant: a latency of around 50 milliseconds on the Apple Watch versus one millisecond on the 5G-equipped competition.

However, as a practical matter to most of us, the difference is imperceptible. That’s what Apple is counting on.

Apple Watch Series 4

Still, so many of Apple’s customers are early adopters who demand the latest and greatest. Analysts say – at a price point exceeding $1,000 – they just may forgo the 2019 upgrade without 5G and wait for 2020’s release.

With Apple’s fiscal 2018 revenue at $265.5 billion, it remains to be seen how significant such a decline in phone and watch sales could be to the company going forward.

5G: effects on the watch world

The next generation of luxury watch buyers will be younger people used to ever-falling latency in all of their computer interactions. With 5G’s one-millisecond latency, these buyers will grow used to near-real-time response with little latency-induced error. This has a profound impact on how we interact with all devices.

For example, securities traders working on Wall Street time stamp every trade they do – it’s a rule governed by the Securities and Exchange Commission. The securities firm’s computers receive a time signal from a GPS satellite.

They then distribute the correct time to the actual trading computers using Precision Time Protocol (PTP, defined in the IEEE 1588 standard and used to synchronize clocks throughout a computer network) on a LAN (local-area network). PTP achieves clock accuracy in the sub-microsecond range, making it suitable for measurement and control systems. Now they have the exact time for use in time stamping their trades.

But what about the trading counterparties at different securities firms? They get their time from the same source. As long as any latency between getting the correct time from the GPS satellite, sending it throughout the trading company, then time stamping each trade is consistent and can be factored out of the time stamped on the trade ticket, all is good.
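A toy Python illustration shows why consistent latency is harmless once it is known. Two firms stamp trades that run late by different but constant amounts. Sorting by the raw stamps gives the wrong order, while subtracting each firm's known latency recovers the true order. The firm names, timestamps, and latency values are all hypothetical. The sketch assumes each firm's stamps run late by a fixed, measured amount; it does not model how regulators actually reconcile audit trails.

```python
from dataclasses import dataclass


@dataclass
class Trade:
    firm: str
    stamped_us: int  # timestamp as recorded by the firm, in microseconds


# Hypothetical, known, constant stamping delays per firm (microseconds):
# each firm's recorded time is this much later than the actual trade.
KNOWN_LATENCY_US = {"Firm A": 12, "Firm B": 3}


def corrected(trade: Trade) -> int:
    """Remove the firm's known, constant delay from its recorded timestamp."""
    return trade.stamped_us - KNOWN_LATENCY_US[trade.firm]


trades = [Trade("Firm A", 1_000_010), Trade("Firm B", 1_000_005)]

raw_order = [t.firm for t in sorted(trades, key=lambda t: t.stamped_us)]
true_order = [t.firm for t in sorted(trades, key=corrected)]

print("raw order:      ", raw_order)
print("corrected order:", true_order)
```

By raw stamps Firm B appears to trade first. After correction, Firm A's trade (999,998 µs) precedes Firm B's (1,000,002 µs). The correction only works because the delays are constant and known; random or unmeasured delays could not be subtracted this way.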

The SEC can then determine with absolute certainty what happened, when it happened, and who is responsible. This is particularly useful in cases where securities brokers are front-running their clients’ trades (placing their own trades fractions of seconds before those of their clients) to gain an illegal price advantage. With program-trading algorithms, this becomes a significant issue.

This is the level of precision such professionals work with. And those on Wall Street are not alone. Anyone doing precision remote operations, such as surgeons, drivers of vehicles, drone pilots, battlefield commanders, police, firefighters, and people working with GPS systems or their results, requires such timing precision in their profession.

Are they likely to accept anything less precise in their personal lives? Some will; some won’t.

Suddenly, an otherwise beautiful watch like Breitling’s B50 Cockpit Super Quartz with an accuracy of +/- 10 seconds per year will be a laughably inaccurate antique to some.

My opinion is that people who demand the exact time on their wrist will wear an interactive platform watch – one that displays UTC or NIST time received from a 5G Internet link that is constantly connected.

Defining timekeeping devices

5G technology and the resulting constant connectivity will redefine timepieces and their uses. Today’s beautiful analog watches tell time to the exact second. For most personal applications as we know them now, that is fine.

However, as Apple has foreseen, connecting an information device to the wrist opens an entirely new world of data – precisely accurate data – available at everyone’s fingertips. Timekeeping for such devices is only the most basic function. Yet, it is a function that requires absolutely accurate time to support the other services this device provides.

For example, five years ago I joked with a cardiologist friend about splurging on the heart-rate app for her Apple Watch. Of course, no such app existed then. It does now.

Further, it soon will be able to feed the results back to an individual’s medical team. Already the Apple Watch Series 4 can detect an irregular heart rhythm suggestive of atrial fibrillation (AFib) and suggest you contact your doctor. Also, should you fall while wearing a Series 4, it can summon emergency medical aid autonomously.

I recently joked with my same friend about splurging on the MRI and CAT-scan apps for her new Apple Watch Series 4. They don’t exist – yet. If they should one day exist, a precise time stamp and signal will be essential.

The same goes for autonomous vehicles using GPS signals that require time accuracy out to the 16th decimal place. Using 5G technology, they can be constantly connected with virtually no latency error for data transmission. All this electronic equipment will one day be contained in a package the size of a cellular phone or perhaps a wristwatch.

Environmentally induced timing error

Our wonderful mechanical and quartz timepieces are limited in their precision. Yes, they’re incredibly accurate, but +/- 1 second a day, or even 5-10 seconds a year, is no longer considered accurate enough for some applications.
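Watch specifications quoted in seconds per day and seconds per year are easier to compare as a rate error in parts per million (ppm). The short Python sketch below converts the figures mentioned in this article. It assumes a 365-day year.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # assumption: 365-day year


def ppm(error_s: float, period_s: float) -> float:
    """Rate error as parts per million: seconds gained or lost per period."""
    return error_s / period_s * 1e6


print(f"+/- 1 s/day  : {ppm(1, SECONDS_PER_DAY):.2f} ppm")
print(f"+/- 10 s/year: {ppm(10, SECONDS_PER_YEAR):.3f} ppm")
print(f"+/- 5 s/year : {ppm(5, SECONDS_PER_YEAR):.3f} ppm")
```

A watch running at +/- 1 second a day errs by about 11.6 ppm, roughly 36 times the 0.32 ppm of a +/- 10 seconds-a-year high-accuracy quartz piece. Both remain many orders of magnitude coarser than the roughly one part in 10^16 of a cesium fountain clock.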

The causes of this inaccuracy are many. Friction of the components contained in the movement is one. Wrist position is another. Temperature variations affect mechanical equipment, as do vibration and magnetic fields.

At the end of the day, even that gorgeous Patek Philippe possibly adorning your wrist this very second is nothing more than mechanical equipment that is subject to environmentally induced timing error. In other words, the time it displays is wrong.

In my analysis, for those demanding absolute time accuracy environmentally induced timing error can be removed only by turning the watch into a time reporting device rather than a mechanical timer that actually tracks time all by itself. Doesn’t sound very romantic, does it?

But getting the time signal from NIST removes the effects of wrist position, temperature, and friction. After all, NIST’s F2 cesium atomic clock employs a vertical flight tube for the cesium atoms, chilled inside a container of liquid nitrogen to minus 193°C (-316°F). This cooling dramatically lowers the background radiation and reduces some of the very small errors in measuring the natural resonance frequency of the cesium atom (9,192,631,770 Hz), which is what makes the clock accurate to 16 decimal places.

Atomic clocks are actually atomic oscillators. They provide a very, very stable frequency. Currently the state of the art uses cesium-133. The NIST-F2 measures the precise length of a second – the time it takes a cesium-133 atom in a precisely defined state to oscillate exactly 9,192,631,770 times.

The NIST-F2 provides the most precise base unit of modern timekeeping. When linked to a clock (or chronometer) this stable frequency tracks the duration between two points – such as seconds.

Therefore, NIST’s F2 all by itself doesn’t even know what time it is! Still, with its 9 billion oscillations per second the NIST-F2 provides a far more precise vibration frequency than does, say, IWC’s red gold Da Vinci Perpetual Calendar Chronograph ($40,200) at only 28,800 vph or just 4 Hz.
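The comparison between cesium and a balance wheel is simple arithmetic. A movement rated at 28,800 vibrations per hour makes 8 beats per second, and two beats make one full oscillation, so the balance runs at 4 Hz. The Python sketch below applies that conversion and compares it with the cesium frequency. It also computes the length of a single cesium cycle.

```python
CESIUM_HZ = 9_192_631_770  # cycles per second, per the SI definition of the second


def balance_hz(vph: int) -> float:
    """Balance frequency from vibrations (beats) per hour; two beats per oscillation."""
    return vph / 3600 / 2


iwc_hz = balance_hz(28_800)
ratio = CESIUM_HZ / iwc_hz
period_ps = 1e12 / CESIUM_HZ  # duration of one cesium cycle in picoseconds

print(f"IWC balance frequency: {iwc_hz} Hz")
print(f"cesium frequency / balance frequency: {ratio:,.1f}")
print(f"one cesium cycle lasts about {period_ps:.2f} ps")
```

The cesium transition oscillates about 2.3 billion times faster than the IWC's balance, and each cycle lasts roughly 109 picoseconds.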

IWC Da Vinci perpetual calendar chronograph

The engineers at NIST will laugh when they read this. They would ask if the IWC can factor out the effects of background radiation using -316ºF liquid nitrogen. The answer is, by the way, no.

But then again NIST’s F2 can’t tell the time, the month, date, year, or even the day of the week. And at $10 million, the NIST-F2 doesn’t even come in a beautiful 18-karat red gold case.

5G: effects on watch collectors

Little in the world of hyper-accurate timepieces is likely to affect most of today’s watch collectors. Most collect and wear these beautiful pieces without much regard to the time of day they tell, anyway.

These are collector’s items, status symbols, memories of people who once wore them, and those who depended on their [relative] accuracy, where each has been, the work they did, and so much more. I get that.

The advancement of 5G connectivity and its effects on timekeeping is just another step in our evolution, just like the chronograph, then the split-seconds chronograph, and now the TAG Heuer Mikrograph and Zenith’s Defy El Primero 21, each of which can measure elapsed time to one-hundredth of a second.

TAG Heuer chronographs through the ages

Still, the accuracy of either piece cannot compare with the time reported by a G-Shock GG1000-1A Mudmaster, which receives hourly radio time corrections and sells for $320 (less than one-tenth the price of the TAG Heuer and Zenith watches).

Casio G-Shock Mudmaster

The point is that the potential to more precisely measure time continues evolving. It is not just about the devices that measure time. It’s also about technological advances in measuring and delivering time that open new opportunities for our human culture.

Quick Facts NIST-F2 Cesium atomic clock

Functions: no hands, no display of hours, minutes, seconds or nanoseconds, just a very, very stable frequency of 9,192,631,770 Hz; when employing ancillary equipment can be used to report hours, minutes, seconds, nanoseconds, and microseconds out to 16 decimal places.

Case: requires an entire room, not easily transported

Movement: in-house created vacuum chamber with super-cold balls of atoms. Operated by computer with all sorts of proprietary software that we cannot even imagine

Price: priceless. Figure at least $10 million in development, subject to all manner of U.S. security export restrictions, end user certificates, taxes, landing fees, docking, and installation charges not included


In general I try to avoid complaining about inaccuracies in articles, as those can happen to anyone, but this whole piece should simply be removed to protect the reputation of both the author and this website.

Let’s start with some physics. 1 millisecond (ms) is 1/1000 of a second. 1 microsecond is 1/1000 of a millisecond (or 1 millionth of a second), and 1 nanosecond is 1/1000 of a microsecond, or 10^-9 of a second. To put it differently, if you need microsecond precision, then millisecond precision will not help you much, because it is literally 1000 times coarser. It is even worse with nanoseconds.

The speed of light is, rounded up, about 300,000 km/second, or 300 km/ms. This remains our theoretical upper limit for achievable transfer speed. In practice it may be lower, and certainly is when we talk about radio (which 5G is).

Operating on a patient 8,000 km away will give you a theoretical lower bound for round-trip latency of roughly 53 ms (16,000 km / 300 km/ms). That is already above the <10 ms lag generally considered imperceptible, and the bound only grows with distance. Also, there is an implication in this article that 1 ms is the latency from anywhere to everywhere. For the reasons mentioned in the previous paragraph, this obviously isn’t true.

In practice not much will change regarding timekeeping. There is nothing special about milliseconds as a unit. Our reaction times are too slow to accurately measure anything by hand more precisely than in hundredths of a second, and most people neither need nor care about time even to that precision. They will certainly not go through the effort of getting anything more precise than what their phones (or smartwatches) already give them.

Those of us who do need more precise measurements already commonly measure time in microseconds or finer, so millisecond latencies do not fundamentally change anything. Mitigating timing errors may become a bit easier, but the approach will remain the same.

5G should bring many benefits and with them changes, but its impact on watch industry will not come on account of lower latency.

I would also welcome more skepticism regarding sources. Qualcomm is a main producer of 5G equipment and one of the companies that may expect to benefit the most from a successful rollout of 5G. Their numbers should be taken with a pinch of salt.

Agreed – better just to ignore this article, which is technically incoherent. For a start, 5G latency is expected to be at best 1-2 MILLIseconds, not microseconds. Next, GPS targeting of missiles relies on the rubidium clocks in the satellites (which are sync’d to a ground-based caesium standard) to achieve positional accuracy, just like your iPhone does; the missile, like your phone, has a quartz clock, and the GPS chip in both cases solves for the error between it and the atomic clock – no 5G involved. Next, there is a ‘Precision Time Protocol’ defined by the IEEE 1588 standard for synchronizing clocks – see Wikipedia – this takes care of the latency between clocks. 5G won’t affect this protocol, or pretty much any aspects of time-keeping that you’ll notice.

Since the author went out of his way to conflate such different concepts as UTC, latency, precision, 5G and Apple Watches, maybe he should have researched a bit more and discovered IEEE 1588 which already does better than whatever 5G is supposed to do over regular networks today.

FWIW, the best-case 1 ms latency is from the end user to the local 5G node, not to wherever the data is headed. Verizon’s SLAs from North America to Europe are <90 ms. 5G won’t alter physics, and data transferred from 8,000 km away is still bound by the speed of light (~37 ms one way, best case). Cool article, love the site and your stuff, but some of your examples are a bit misleading.

The author incorrectly uses ms as an abbreviation for microseconds. The symbol ms is for milliseconds. The correct abbreviation for microseconds uses the Greek letter mu (when available on the keyboard) or us when it is not. All of the author’s references to microseconds should have read like 50 us, 1 us, and >1 us. This is especially important in an article that references both milliseconds (ms) and microseconds (us).

Thank you for that clarification. I will now change the text to ensure that “microseconds” and “milliseconds” are crystal clearly indicated.

Don’t forget, with 5G, we’ll also be hitting our stingy ISP’s data caps far more accurately. :/

Thanks for these wonderful thoughts.